Dependency relation

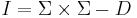

In mathematics and computer science, a dependency relation is a binary relation that is finite, symmetric, and reflexive; i.e. a finite tolerance relation. That is, it is a finite set of ordered pairs $D$, such that

- If $(a,b) \in D$ then $(b,a) \in D$ (symmetric)
- If $a$ is an element of the set on which the relation is defined, then $(a,a) \in D$ (reflexive)
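As a minimal sketch, assuming a relation is represented as a Python `set` of 2-tuples over an explicitly given alphabet, the two conditions can be checked directly. The function name `is_dependency_relation` and the alphabet `{x, y, z}` are hypothetical choices for illustration.

```python
def is_dependency_relation(alphabet, relation):
    """Check that `relation` (a set of pairs over `alphabet`) is symmetric
    and reflexive. Finiteness holds automatically for a Python set."""
    # Every pair must consist of letters drawn from the alphabet.
    if any(a not in alphabet or b not in alphabet for a, b in relation):
        return False
    # Symmetric: (a, b) in D implies (b, a) in D.
    symmetric = all((b, a) in relation for a, b in relation)
    # Reflexive: (a, a) in D for every letter a of the alphabet.
    reflexive = all((a, a) in relation for a in alphabet)
    return symmetric and reflexive


# Usage: a relation in which x and y depend on each other.
sigma = {"x", "y", "z"}
d = {("x", "x"), ("y", "y"), ("z", "z"), ("x", "y"), ("y", "x")}
print(is_dependency_relation(sigma, d))                   # True
print(is_dependency_relation(sigma, d - {("y", "x")}))    # False: not symmetric
```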

In general, dependency relations are not transitive; thus, they generalize the notion of an equivalence relation by discarding transitivity.
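For instance, a relation in which $x$ depends on $y$ and $y$ depends on $z$, while $x$ and $z$ stay unrelated, is reflexive and symmetric but not transitive. The sketch below searches for such a violation; the helper name `transitivity_violation` and the alphabet `{x, y, z}` are hypothetical.

```python
def transitivity_violation(relation):
    """Return a triple (a, b, c) with (a, b) and (b, c) in the relation
    but (a, c) not in it, or None if the relation is transitive."""
    for a, b in sorted(relation):
        for b2, c in sorted(relation):
            if b == b2 and (a, c) not in relation:
                return (a, b, c)
    return None


sigma = ["x", "y", "z"]
# Reflexive pairs plus the symmetric pairs x-y and y-z (x and z unrelated).
d = {(s, s) for s in sigma} | {("x", "y"), ("y", "x"), ("y", "z"), ("z", "y")}
print(transitivity_violation(d))  # ('x', 'y', 'z')
```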

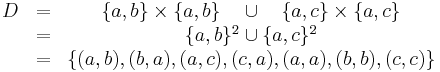

Let $\Sigma$ denote the alphabet of all the letters of $D$. Then the independency induced by $D$ is the binary relation $I$:

$$I = (\Sigma \times \Sigma) \setminus D$$

That is, the independency is the set of all ordered pairs of letters of $\Sigma$ that are not in $D$. The independency is symmetric, because $D$ is symmetric (if $(a,b) \notin D$ then $(b,a) \notin D$), and irreflexive, because $D$ contains $(a,a)$ for every letter $a \in \Sigma$.
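A minimal sketch of computing $I = (\Sigma \times \Sigma) \setminus D$, assuming relations are Python sets of tuples; the function name `independency` and the relation on `{x, y, z}` are illustrative, not taken from any library.

```python
from itertools import product


def independency(alphabet, dependency):
    """Return every pair of letters from `alphabet` that is not in
    the dependency relation, i.e. the induced independency."""
    return set(product(alphabet, repeat=2)) - set(dependency)


# Hypothetical dependency relation on {x, y, z}: x and y depend on each other.
sigma = ["x", "y", "z"]
d = {(s, s) for s in sigma} | {("x", "y"), ("y", "x")}
i_rel = independency(sigma, d)

print(sorted(i_rel))  # [('x', 'z'), ('y', 'z'), ('z', 'x'), ('z', 'y')]
# The induced relation is symmetric and irreflexive.
print(all((b, a) in i_rel for a, b in i_rel))     # True
print(all((s, s) not in i_rel for s in sigma))    # True
```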

The pairs $(\Sigma, D)$ and $(\Sigma, I)$, or the triple $(\Sigma, D, I)$ (with $I$ induced by $D$), are sometimes called the concurrent alphabet or the reliance alphabet.

The pairs of letters in an independency relation induce an equivalence relation on the free monoid $\Sigma^*$ of all strings of finite length: two strings are equivalent when one can be obtained from the other by repeatedly swapping adjacent letters that are independent. The equivalence classes induced by the independency are called traces, and are studied in trace theory.
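A sketch of enumerating the trace containing a given word, assuming the swap-based definition of equivalence: a breadth-first search applies every swap of adjacent independent letters until no new words appear. The function name `trace_of` and the independency used here are hypothetical, and the search is only practical for short words, since a trace can contain exponentially many strings.

```python
from collections import deque


def trace_of(word, independency):
    """Return the set of all words reachable from `word` by swapping
    adjacent independent letters, i.e. the trace containing `word`."""
    seen = {word}
    queue = deque([word])
    while queue:
        w = queue.popleft()
        for i in range(len(w) - 1):
            if (w[i], w[i + 1]) in independency:
                # Swap the independent neighbours at positions i and i + 1.
                v = w[:i] + w[i + 1] + w[i] + w[i + 2:]
                if v not in seen:
                    seen.add(v)
                    queue.append(v)
    return seen


# Hypothetical independency: x and z commute; y commutes with nothing.
i_rel = {("x", "z"), ("z", "x")}
print(sorted(trace_of("xzy", i_rel)))                  # ['xzy', 'zxy']
print(trace_of("xyz", i_rel) == trace_of("zyx", i_rel))  # False
```

Because `y` is independent of nothing, it acts as a barrier: letters can never be moved across it.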

Examples

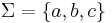

Consider the alphabet  . A possible dependency relation is

. A possible dependency relation is

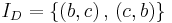

The corresponding independency is

Therefore, the letters  commute, or are independent of one-another.

commute, or are independent of one-another.
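The example can be checked mechanically. This sketch assumes the alphabet $\Sigma = \{a, b, c\}$ and $D = \{a,b\}^2 \cup \{a,c\}^2$, represents relations as Python sets of tuples, and also confirms that this $D$ is not transitive.

```python
from itertools import product

sigma = ["a", "b", "c"]
# D = {a,b}^2 union {a,c}^2; product over a string iterates its characters.
d = set(product("ab", repeat=2)) | set(product("ac", repeat=2))
# I_D = (sigma x sigma) minus D
i_d = set(product(sigma, repeat=2)) - d

print(len(d))       # 7
print(sorted(i_d))  # [('b', 'c'), ('c', 'b')]
# D is not transitive: b depends on a and a depends on c, yet b and c are independent.
print(("b", "a") in d and ("a", "c") in d and ("b", "c") not in d)  # True
```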